When is it appropriate to use Generative AI and Large Language Models? What are the ethical dimensions and how can we consider the contributory effects on the climate crisis? We’ve been thinking about it a lot. It’s definitely a work in progress.

Our (current) AI usage policy

I founded Cog before the World Wide Web existed; email wasn’t a thing, phones were fixed to copper cables and nobody used the words ‘media’ and ‘social’ in the same sentence.

I’ve watched as many new technologies have been touted as game-changing or creativity-killing. Most have gently faded or been easily assimilated. But AI does seem different, and recent events have made us focus more keenly on its potential to revolutionise how we work, and on the implications for our lives and our planet.

Whatever our opinions, we cannot ignore that Artificial Intelligence will be ubiquitous. Currently it’s a novelty. Soon it will become the norm (and we’ll all stop waving the term as if it’s a magic wand).

At Cog we’ve had to form opinions fairly quickly about appropriate use, the ethical dimensions and the contributory effects on the climate crisis.

We’ve had to brief our team and write a ‘usage’ policy.

Having done that work, I thought I might as well share our thinking, with the caveat that this technology is moving so fast that we might need to rewrite the policy very soon.

Context

Artificial Intelligence is an unhelpful, Terminator-esque, catch-all term, so it’s useful to define more precisely what we mean.

As the scientists will tell you, AI technology is entirely benign – like a bullet or maybe a self-replicating human pathogen. It’s only when we start using it, and release it into the general population, that it has significant consequences.

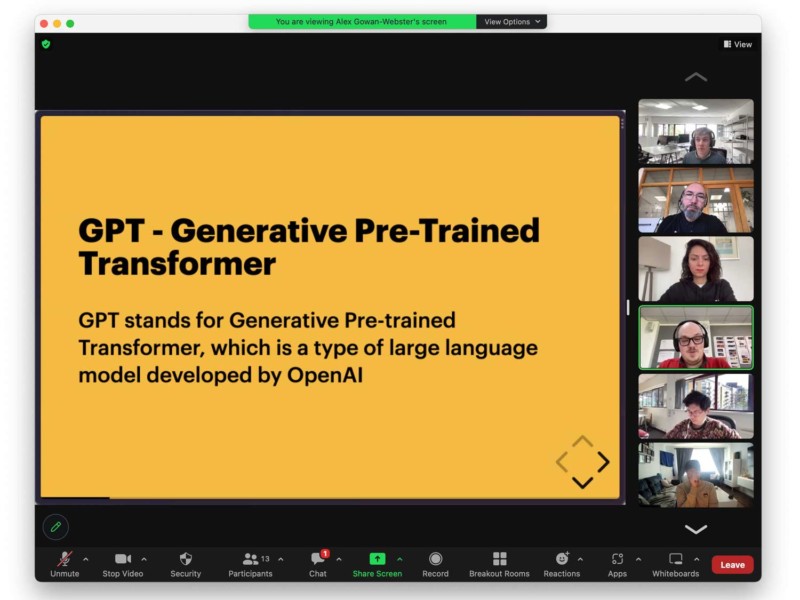

When most of us talk about AI, we’re actually talking about the interfaces that have been built to interact with Generative AI, and specifically with Large Language Models (LLMs).

In this context, Artificial Intelligence is neither destroyer nor saviour; it is just another term for very big maths.

LLMs use unimaginable amounts of data to ‘train’ systems to guess answers (outputs) to questions (inputs).

They are only as good as the data sets behind them, so it is in the interest of the companies that build them to find more and more data to feed into the machines.

Clever interfaces turn the data into everyday language so it appears to humans as if we are asking questions and retrieving answers.

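Actually, the system builds its answer a piece at a time, a word or part of a word at each step. At every step it weighs a huge number of possible continuations, the ‘wrong’ ones as well as the ‘right’ ones, against the patterns it learned from its training data, discarding the least likely and narrowing towards the most likely. It has no built-in sense of whether an answer is true, only of whether it is plausible. The interfaces are also designed to appear confident in their guesses and to fill in gaps in their ‘knowledge’ (the available data).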

Our interactions can also be fed back in: the companies behind these systems can use our responses to ‘teach’ the model whether it guessed ‘right’, and use the best guesses as a starting point when training future versions.

To squidgy-brained humans, who cannot process information that efficiently, this can feel like intelligence, or sometimes magic.

ChatGPT was the first interface to jump into public consciousness, but there are many others racing to market. Gemini and DeepSeek are the two that have forced our hand.

Our recent history

- As part of the creative community, we’ve long been aware of the issues raised by big companies building data-trawling into their T&Cs. We’ve always prevented our software platforms (I’m looking at you, Adobe and Figma) from harvesting our data to train their AI models.

- Last year I had to abandon the process of recruiting an intern when I realised that all of the 100+ applications had been written, wholly or in part, using ChatGPT. It was impossible to fairly assess one candidate against another so I had to give up.

- Many members of the Cog team have individually been using AI for prompts and shortcuts on laborious tasks, such as summarising long documents or suggesting different ways of approaching a coding conundrum. But we haven’t adopted specific tools company-wide.

- In the past few weeks, every app and software platform seems to have offered a ‘magic’ button to build AI into their workflows (for a price). We’ve been stoically resisting. But it can be tempting to see what is possible by pressing that shiny button.

These all seemed like isolated and manageable scenarios.

However, last week we noticed that, seemingly without notification, all our Google accounts had been enabled with Gemini. That forced our hand and we’ve had to properly consider the implications for us as a company and of course for the wider society that is affected by our actions.

This morning, DeepSeek’s launch of a low-cost Chinese alternative to the Silicon Valley tech-bro offerings crashed the share prices of its US competitors. That feels like it’s shifting the goalposts of this game-changing technology.

The implications of us using AI tools

It’s probably beyond our remit to think about the broader implications of sentient automatons and the singularity, and that really isn’t the level of AI we are concerned with (at the moment).

But we should all be aware of our actions and at least be mindful of the consequences of those actions in choosing to engage with AI and LLMs.

From our perspective there are four key areas to consider…

1. Ownership and privacy

We need to remember that AI systems need data and examples to learn from. Those examples were originally created by humans, and they are often ‘trawled and harvested’ without the permission of the people who created them.

In the creative sector, original thinking is currently highly valued. But much of that work is being harvested (with or without our consent) to create systems that will replicate our work and potentially render our roles redundant.

We might not care that AI systems have scanned every photo we have ever uploaded to the internet, in order to mimic human-like features when generating new images – after all, we gave permission for that when we ticked the T&Cs on the app we use. Right?

But we might be upset if we were a novelist whose lifetime of work has been harvested without our knowledge, and an AI generator can now write a novel in our style, in seconds.

Or we might be devastated that the work of a decade spent learning to code has been harvested and assimilated into a program that can deliver a reasonable-looking website within a few keystrokes.

Or that we have devoted our career to considered design, only for all of that work to be trawled, reworked and delivered via an algorithm that churns out logos for ‘free’ (on a site that is selling us cryptocurrency and fat-busting tablets).

We might be even more disturbed if our private notes or intimate photos were accidentally exposed to an AI bot, and that content appeared, largely unedited, elsewhere.

Or if family photos were repurposed to advertise a far-right political party, or our children’s images appeared in deepfake pornography.

2. Impact on global politics

AI needs lots of hardware to run on.

Nearly all computing hardware relies on precious metals and rare minerals, often mined in some of the poorest, most vulnerable and politically unstable pockets of the world. The world’s superpowers are vying to secure the rights to these commodities, and human beings are caught in the middle of it all.

And around 90% of the world’s most advanced semiconductors are produced in Taiwan, which China, with all its military superiority and decades of rhetoric about its tiny neighbour, considers a breakaway province.

Maybe we can’t do anything about that situation, but we should at least recognise that over-reliance on these platforms can make us vulnerable to global politics, and that our collective actions can contribute to conflict.

3. Impact on the climate catastrophe

The combined impact of data processing for AI is utterly terrifying. Predictions warn that the ‘AI industry could use as much energy as the Netherlands’ within two years.

The world is littered with tens of thousands of acres of server farms, each devouring energy and generating heat that must be cooled and stabilised with water. And, because of the economics, server farms are often sited in deserts, where water is the most precious of resources.

Large AI systems require immense processing power. The technology is, at least currently, vastly inefficient. The energy needed to ‘train’ a new model is so huge that it is hard even to quantify.

Just using the technology massively ramps up our energy consumption. A recent study at Cornell concluded that a Generative AI system might use 33 times more energy than machines running task-specific software. Even a simple search query can use ten times more energy when it is answered by AI.

We spend a lot of our time, quite rightly, perfecting code and tweaking performance so that our websites use as little energy as possible. What’s the point if we then use a vastly inefficient Generative AI system to provide the content?

4. Equity and discrimination

Any AI system is only as good as its input data and the objectivity of its programming. We’ve seen multiple examples of LLMs producing results that are racially biased, skewed by gender, or framed from an able-bodied, neurotypical or heteronormative perspective.

It is important that we take the time to consider the huge strides society has made towards equity and equality (and the distance still to be covered). AI has the potential to support that progress, but also to ignore perspectives that fall outside the ‘norm’ of its dataset, unless we build in safeguards and human overrides to prevent that.

Our (current) policy

At Cog we are cautious adopters, weighing up the pros and cons in terms of privacy, ethics and sustainability.

We need to consider, and be considerate in, our use of this exciting new technology. As more platforms introduce and push their own versions of it, we need to decide how and when we ‘buy in’ to that approach.

- We are human-centred and very aware that we are paid for our skills, expertise and professionalism. We are happy to use AI tools to augment and enhance our work but not to replace it. We encourage the innovative use of AI alongside a continuous drive to improve our human-centric knowledge and unique abilities.

- We will continue to block creative software platforms, such as Adobe and Figma, from trawling our content or accessing our work. We will continue to be vigilant and prevent that trawling from happening (even by stealth) on any platform. We accept that we cannot practically prevent it happening on social media so we are careful about the content we upload. We don’t currently attempt to block any trawling of public content on our own website, or on client websites.

- We are aware of the myriad issues around Generative AI (such as Midjourney or other image creation models) and intellectual property. We avoid using Generative AI to create visual content – we want creative people to be paid fairly, so we will continue to commission and credit illustrators, photographers, writers and other creative professionals.

- We remember that client data is intellectual property, and we would never share our clients’ property openly. We extend the same respect to all the data we handle.

- We never input anything confidential into public AI tools (such as the online versions of ChatGPT or Gemini, formerly Bard). This includes client data, sensitive business information and ideas, passwords, API keys or anything else that could be considered sensitive or private.

- We might use closed internal systems to perform specific tasks, using sensitive data, but only when we are satisfied that the system is not externally accessible and only if it is strictly necessary to a client project.

- If we run a task, we ensure that we also end it and do not leave processes running in the background that could be accidentally exposed to external systems.

- We always encrypt any sensitive data that is sent across a network where it could be intercepted.

- If we are in any doubt about whether something is sensitive, we run it by our Head of Technology or our line manager before using the tool.

- We appreciate that AI can be useful for summarising non-sensitive or publicly available content, coming up with ideas, writing drafts and generating examples. We use it for that purpose. But AI should never be used to create final versions of documents, especially if shared externally or paid for by clients.

- When using the by-products of AI (including those of any large language model), we remain responsible for the final output of the work. AI-generated content should always be sense-checked and fact-checked by us before being shared – we are personally responsible for ensuring that happens.

- We are open about our use of tools when we use them. For example, if we use Google’s AI meeting notes, we should make that clear and get the other participants’ consent before proceeding. If we use results in a document, we should cite the source: “when I asked ChatGPT what the average audience numbers would be, it gave me this result…”

- We choose and use the most efficient tool for the job, not just the most readily available one. We weight energy use more highly than other factors when deciding on the ‘best’ tool. We might use AI to summarise large documents into bullet-point notes, but we would not use it for simple arithmetic when calculating a budget.

- We should all feel empowered to raise any issue or share any concern with our line manager.

- We are aware that AI is a term used to summarise a rapidly evolving and ever-shifting landscape of tools. We will be aware, watchful and responsive to changes as they happen, and we will adapt this policy to suit.

This policy was first published in January 2025.
