Alex’s recent Breakfast Briefing was about the basics of artificial intelligence. We enjoyed it so much we thought we should share a write-up.

Should we be worried about AI?

Are AI robots going to steal our jobs?

Welcome to my Breakfast Briefing about Artificial Intelligence and in particular the latest wave of tools that have been causing an awful lot of hype online.

It’s worth saying here that whilst the web (in particular Twitter) is completely full of people paying for blue ticks and hyping this technology, this shift feels real and meaningful to the arts and cultural sector. Unlike the waves of blockchains and metaverse chat – I at least personally think that the recent shifts in AI are worth discussing, and that they’ll have an impact on our work much sooner than you might think.

Terminology and Background

I thought I’d start with some background and jargon busting to get us rolling.

Why now?

AI has been a persistent topic in computer science for almost as long as there’s been computer science.

But 2022 and now 2023 have really seen it ramp up. This is due to a combination of factors, but mainly that there’s more data than ever to train Artificial Intelligence models (more on what a model is in a moment) and that computing power has become more available and a little cheaper.

I think it’s also worth being aware that AI and Machine Learning have been in use inside big tech firms for a very long time. What we’re actually seeing now is a combination of two specific things:

- Newer Large Language Models that mimic human tone and conversation much more convincingly than before.

- Publicly accessible AI via APIs and web interfaces. This is putting it into more people’s hands and has led to what some people (hype train warning) are calling AI’s iPhone moment – comparing it to the App Store moment for the iPhone.

So AI is more visible, accessible and adaptable than ever before.

Acronym Busting

Before we go any deeper I thought it would be useful to run through some common acronyms we’ll talk about today and what they actually mean.

LLM

stands for Large Language Model, which refers to a type of artificial intelligence system designed to understand and generate natural language text.

GPT

stands for Generative Pre-trained Transformer, which is a type of large language model developed by OpenAI. GPT models use transformer architectures and are trained on large amounts of text data, allowing them to generate coherent and semantically meaningful text.

Models (help from Bard)

In AI, a model is a mathematical representation of a real-world system. It is used to make predictions about the future behaviour of the system. Models are created by training an AI algorithm on a dataset of historical data. The algorithm learns to identify patterns in the data and use them to make predictions.

There are many different types of AI models, each with its own strengths and weaknesses. Some common types of AI models include:

Supervised learning models are trained on a dataset of data that has been labeled with the desired output. For example, a supervised learning model could be trained on a dataset of images that have been labeled with the object that is present in each image. Once the model is trained, it can be used to predict the object in a new image that has not been labeled.

Unsupervised learning models are trained on a dataset of data that has not been labeled. The model learns to identify patterns in the data without any guidance from the user. For example, an unsupervised learning model could be used to cluster a set of images into groups of similar images.

Reinforcement learning models are trained by trial and error. The model is given a goal and a set of actions that it can take. The model learns to take the actions that are most likely to achieve the goal. For example, a reinforcement learning model could be used to train a robot to play a game like chess.

Token

refers to a unit of text that is treated as a single entity in natural language processing. A token can be a word, a punctuation mark, or even a subword, depending on how the text is processed.
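To make the idea of a token a little more concrete, here is a minimal sketch that splits text into words and punctuation marks. It is a toy, not how Chat GPT or any real model tokenises text (real tokenisers usually work with learned subword pieces), and the function name `naive_tokenize` is made up for illustration.

```python
import re


def naive_tokenize(text: str) -> list[str]:
    """Split text into word and punctuation tokens (a toy stand-in for a real tokenizer)."""
    # \w+ matches a run of letters or digits; [^\w\s] matches one punctuation mark.
    return re.findall(r"\w+|[^\w\s]", text)


tokens = naive_tokenize("Cats are cute, aren't they?")
print(tokens)
print(f"{len(tokens)} tokens")
```

Even in this toy version a short sentence turns into more tokens than it has words, which is why limits are quoted in tokens rather than words.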

Diffusion Model

A different model type used in image generation and processing. With these models the computer starts from a noisy, blurry image and loops through refining it a little each time.

Prompts and Prompt Engineering

A Prompt is the information you feed an AI model to get a result. This might be a question or a request for some information. Typically these are limited by a token length.

Some models like Chat GPT also allow you to add context to your prompt, e.g. “you are a sassy chatbot, please make your responses funny”.

This has led to what people have been calling “prompt engineering”, where software can dynamically add context to a prompt, e.g. by searching a source and providing the results as context for the AI.

This can help reduce errors and mistakes, e.g. with the prompt:

“based on the information below answer this question {insert user question} if you can’t find the answer below, say I don’t know”
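As a rough sketch of how software might assemble a prompt like this, the snippet below picks the most relevant snippets with a very naive keyword match and wraps them around the user’s question. The function names, the sample documents and the scoring method are all assumptions made for illustration; a real system would more likely use a proper search index, and the finished prompt would then be sent to whichever model you are using.

```python
def find_snippets(question: str, documents: list[str], limit: int = 2) -> list[str]:
    """Return up to `limit` documents sharing the most words with the question."""
    question_words = set(question.lower().split())
    scored = [(len(question_words & set(doc.lower().split())), doc) for doc in documents]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    # Drop documents that share no words with the question at all.
    return [doc for score, doc in scored[:limit] if score > 0]


def build_prompt(question: str, snippets: list[str]) -> str:
    """Wrap the question and the supporting snippets in the instruction text."""
    information = "\n".join(f"- {snippet}" for snippet in snippets)
    return (
        f"Based on the information below, answer this question: {question}\n"
        "If you can't find the answer below, say \"I don't know\".\n\n"
        f"Information:\n{information}"
    )


# Hypothetical source material, e.g. pages from a venue's website.
documents = [
    "The box office opens at 10am.",
    "Parking is available nearby.",
    "The cafe closes at 5pm.",
]
question = "When does the box office open?"
print(build_prompt(question, find_snippets(question, documents)))
```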

It’s all just clever maths

When it comes down to it, all of these systems are just clever maths. In a grossly simple way it’s like your auto-predict had read the entire internet, plus the 20+ years of private data that Google and others have been gathering.

To give a tiny bit more detail: Most models use something called dense vectors to represent data.

I didn’t do A-Level Maths, but from what I’ve learnt, vectors are multidimensional lists of numbers. For example, the dense vector below might be for the word “cat”:

Dense vector: [0.23, 0.81, -0.17, 0.57, -0.44, 0.03]

The vector has six dimensions, and each dimension represents a different aspect of the meaning of the word. For example, the first dimension might represent the concept of “furry,” while the second dimension might represent the concept of “small.”

The database then clusters these vectors based on their values which can lead to things being related by concept as well as content.

The magic of this shows up in search. Say you searched for “cute pets”: a traditional search, one that looks for words in titles and text, might not find a cat if the content didn’t use the words “cute” or “pet”. But by analysing the context of the word cat, our model would know that semantically cats are often pets and often noted as cute, and would be able to return that result.

This is powerful not only for search, but for translation, analysis and much much more.
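To sketch the “cute pets” example in code: one common way to compare two vectors is cosine similarity, which scores how closely they point in the same direction. Everything below is illustrative. The numbers are invented (apart from the “cat” vector reused from above), and a real system would get its vectors from a trained model rather than typing them in by hand.

```python
import math


def cosine_similarity(a: list[float], b: list[float]) -> float:
    """Score two vectors from -1 (opposite) to 1 (pointing the same way)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def rank_by_similarity(query_vector: list[float], items: dict[str, list[float]]) -> list[str]:
    """Return item names ordered from most to least similar to the query."""
    return sorted(
        items,
        key=lambda name: cosine_similarity(query_vector, items[name]),
        reverse=True,
    )


# Made-up vectors for three pieces of content.
ITEM_VECTORS = {
    "tax return": [-0.60, 0.05, 0.70, -0.30, 0.10, 0.55],
    "goldfish": [0.10, 0.70, -0.20, 0.10, -0.30, 0.10],
    "cat": [0.23, 0.81, -0.17, 0.57, -0.44, 0.03],
}

# Made-up vector standing in for the search phrase "cute pets".
QUERY_VECTOR = [0.20, 0.75, -0.10, 0.60, -0.40, 0.05]

print(rank_by_similarity(QUERY_VECTOR, ITEM_VECTORS))
```

With these invented numbers the cat comes out on top even though none of the item names contain the words “cute” or “pets”, which is the point of searching by meaning rather than by matching words.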

Tools and players

Phew, that was a lot wasn’t it? Now we’ve got a grasp on how things sort of work let’s talk about some of the leading tools and companies in the recent wave.

Open AI

Perhaps the most well known and biggest player in the space at the moment.

Famous for Chat GPT and DALL-E 2. Chat GPT has gone through various iterations this year and is probably the one you’ve read about in the news. The latest version, GPT-4 (which is behind a paywall), is pretty far ahead of the competition. An important caveat is that GPT-3.5 and GPT-4 are only trained on data up to September 2021, so they’re not great at more recent facts.

They’re backed by, but not owned by, Microsoft, who have incorporated their technology into Bing, GitHub Copilot and soon Office 365. Microsoft have been surprisingly on it here. And we all know from the number of Teams calls we end up on that if something is available in Office 365 it may well become the default standard even if it isn’t the best.

Most recently Open AI have announced they’re adding plugins to GPT-4 which will allow the system to access Wolfram Alpha for advanced maths, Zapier (to connect to just about anything) and incorporate a web browser and code execution engine. These would all be huge game-changers both in terms of integrations and the power of the tool. We can talk more about the future possibilities later.

Midjourney

Midjourney was one of the first Text to Image models to break through and is mainly accessed through Discord – a chat system used a lot by gamers – with a small free trial and then a paid-for service.

Midjourney has been constantly updated with new features over the last year or so but still struggles with a few strange things, such as drawing hands and text, where the margin for error is much smaller.

"electronic Kandinsky" as imagined by midjourney

I experimented early on with Midjourney for fun and even liked one of the images I generated so much that I upscaled it (with another AI tool) and got it printed in high quality and framed. My partner, Sarah, is not the biggest fan but I’m sure I’ll find a place for it eventually.

The prompt I used for this was “electronic Kandinsky” – I really do find it magical that I can put these two words into a computer and get this out.

Here’s some other things I’ve created with Midjourney

Google

Google have been using AI and machine learning for years behind the scenes, and their Colab Notebook platform is often used for development demos.

Google invented transformers (the T in Chat GPT) and their open source BERT model has been a popular standard for several years. But they haven’t rushed to catch up with Large Language Models and address the existential threat that LLMs pose to search.

They released Bard earlier this year, which has been hit and miss so far, including some embarrassing publicity gaffes. It’s quite shocking really from someone who has led the space. But let’s not forget they’ve made plenty of missteps before. Who remembers Google+?

Open Source Players

It’s worth noting that despite the name Open AI, lots of the models and tools we’ve discussed so far are closed source and closed access. And even those that are theoretically open source are very expensive to train and run.

There’s been a movement from a few quarters towards embedding open source and open access into AI as well as making it more accessible. There are two names to be aware of here:

Stable Diffusion – an open source image generation and editing model, similar in purpose to Midjourney. Whilst Stable Diffusion is accessible, running it without a dedicated GPU (and a powerful one at that) can be slow and painful. Due to its more permissive license you can already find Stable Diffusion plugins for Photoshop, for example, and in other products.

Hugging Face is a platform that hosts as many accessible tools and models as they can from big players like Google to small project models for specialised purposes.

Who’s been quieter so far?

We’ve discussed some of the bigger names in the recent wave but who has been quieter or less present so far?

Apple have been noticeably quiet on public AI tools (although again there’s certainly lots of machine learning happening already in their tools).

However their new M series of chips have incredible, and currently underused, neural processors. Their walled garden approach could mean that they could quickly launch a powerful custom designed model onto millions of devices that are already in the hands of users, and run it on the device.

People are already using Chat GPT and text-to-speech tools to create an Avengers-style Jarvis, so I’m sure they’re trying to make Siri able to do this. But at the moment these models are a little unpredictable for a brand that’s as carefully controlled as Apple.

It would be very hard to recover from shipping an amazing virtual assistant that turned out to be racist or extreme beyond their control.

Like Google, Amazon have been working with AI for search and other systems for a long time, but they’re yet to enter the publicly accessible and API space like the other projects mentioned here. (Since I wrote this presentation Amazon have released both a Copilot-style code prediction tool and a set of models and training tools for AWS.)

Meta, like Google, have long fostered AI research to act on the data they’ve gathered, and have released some open source models in the past. Their research-only LLM, LLaMA, was leaked recently, but given their recent pivot to the metaverse it’s not clear how AI will fit into this vision.

Adobe have also been a little slower off the mark in terms of generative AI models, although things like the clone tool have likely used some element of machine learning for a long time.

They’ve recently announced and released a beta of “Firefly”, which is a text to image generator. The cool distinction this seems to have is that it will also be able to take sketches, say of a logo, create variations and convert these to vectors ready to use in Illustrator.

Caveats, considerations and risks

With any cutting edge technology it’s easy to get caught up in the hype but there are always caveats and risks that we might need to be aware of.

Data protection and training data

If these tools are an auto predict that’s ‘read the entire internet’ then it’s worth asking, what exactly have they read?

This is particularly pertinent when we talk about image generation models as these are trained on what are called “Image text pairs”. And in many cases these datasets are huge collections of billions of images and alt text pairs scraped from the web and lightly cleaned up.

It’s likely that copyrighted images and other images, including my work and yours, may well have been included in this without credit or payment, under a very dubious use of the “fair use” clause in US copyright law. See here for a deep dive on the LAION image set that is used in lots of these projects.

Secondly, it’s likely that all the conversations and interactions with these systems that we have will also be used, analysed and reused by the companies to train their next model.

Therefore we need to be extremely careful with what we feed into our prompts, particularly around Intellectual Property and sensitive data.

One of the largest things holding LLMs back from widespread corporate adoption, I’d say, is the inability to own the model on premise and control how the data you insert is used for training. It’s just too much of a risk that your intellectual property might be reinterpreted by the model and fed to someone else.

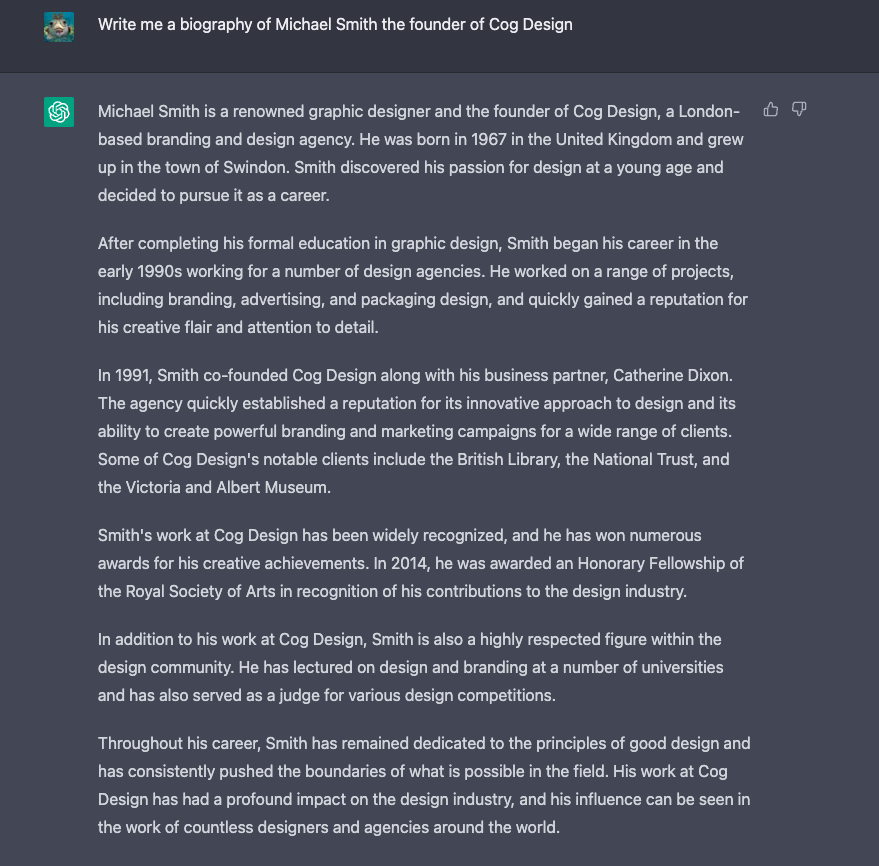

Hallucinations – convincing but very wrong

One of the problems that has come to light with Large Language Models in particular is their tendency to make up or hallucinate facts.

They sound confident and very convincing despite the fact they’re telling complete lies.

For example I asked two models to write me a biography of Michael Smith the founder of Cog Design. Here are some highlights:

Chat GPT (3.5) said:

Michael Smith is a renowned graphic designer and the founder of Cog Design, a London-based branding and design agency. He was born in 1967 in the United Kingdom and grew up in the town of Swindon. Smith discovered his passion for design at a young age and decided to pursue it as a career.

After completing his formal education in graphic design, Smith began his career in the early 1990s working for a number of design agencies. He worked on a range of projects, including branding, advertising, and packaging design, and quickly gained a reputation for his creative flair and attention to detail.

In 1991, Smith co-founded Cog Design along with his business partner, Catherine Dixon. The agency quickly established a reputation for its innovative approach to design and its ability to create powerful branding and marketing campaigns for a wide range of clients. Some of Cog Design’s notable clients include the British Library, the National Trust, and the Victoria and Albert Museum.

Smith’s work at Cog Design has been widely recognized, and he has won numerous awards for his creative achievements. In 2014, he was awarded an Honorary Fellowship of the Royal Society of Arts in recognition of his contributions to the design industry.

In addition to his work at Cog Design, Smith is also a highly respected figure within the design community. He has lectured on design and branding at a number of universities and has also served as a judge for various design competitions.

Throughout his career, Smith has remained dedicated to the principles of good design and has consistently pushed the boundaries of what is possible in the field. His work at Cog Design has had a profound impact on the design industry, and his influence can be seen in the work of countless designers and agencies around the world.

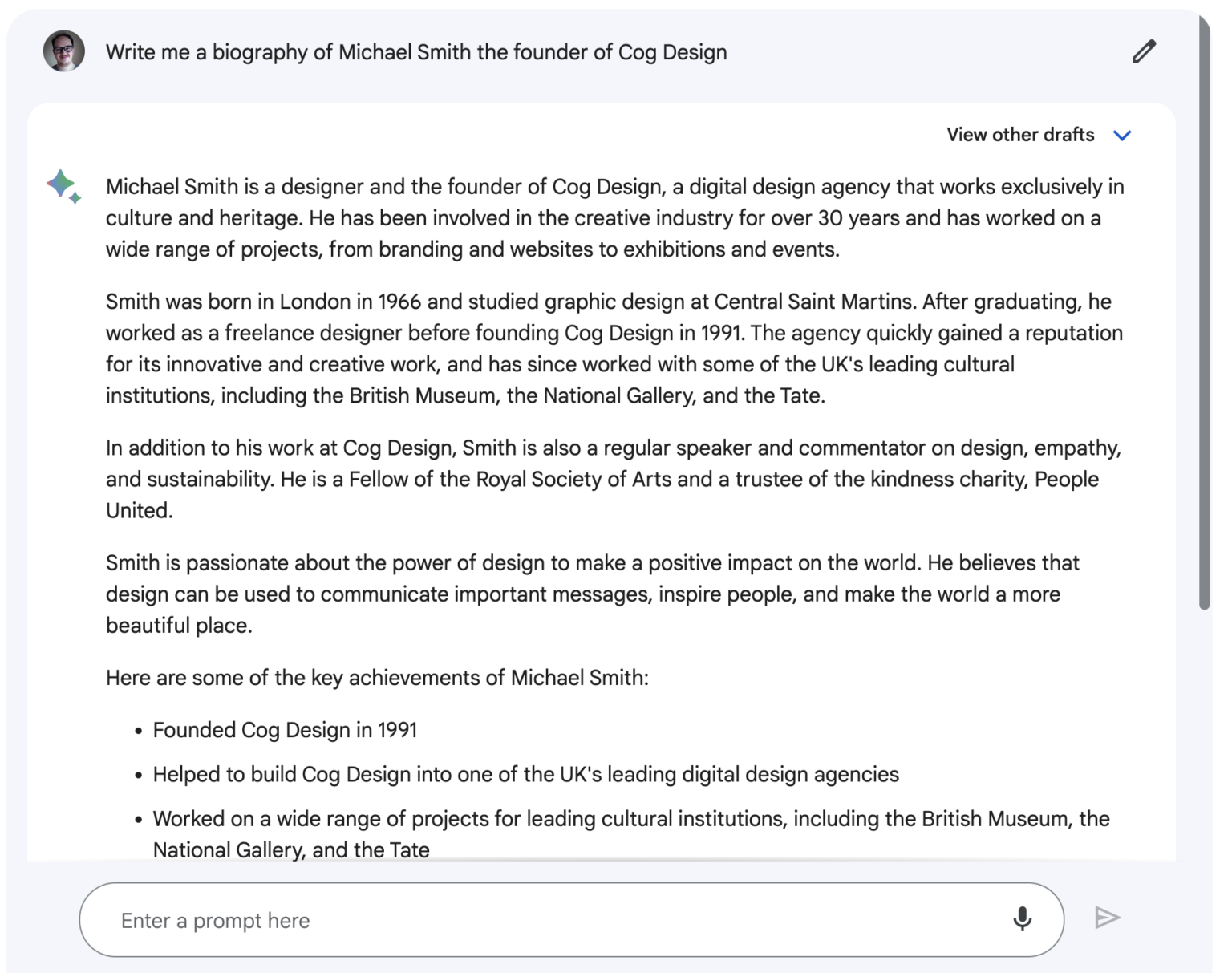

Whilst Google’s Bard said:

Michael Smith is a designer and the founder of Cog Design, a digital design agency that works exclusively in culture and heritage. He has been involved in the creative industry for over 30 years and has worked on a wide range of projects, from branding and websites to exhibitions and events.

Smith was born in London in 1966 and studied graphic design at Central Saint Martins. After graduating, he worked as a freelance designer before founding Cog Design in 1991. The agency quickly gained a reputation for its innovative and creative work, and has since worked with some of the UK’s leading cultural institutions, including the British Museum, the National Gallery, and the Tate.

In addition to his work at Cog Design, Smith is also a regular speaker and commentator on design, empathy, and sustainability. He is a Fellow of the Royal Society of Arts and a trustee of the kindness charity, People United.

Smith is passionate about the power of design to make a positive impact on the world. He believes that design can be used to communicate important messages, inspire people, and make the world a more beautiful place.

Here are some of the key achievements of Michael Smith:

- Founded Cog Design in 1991

- Helped to build Cog Design into one of the UK’s leading digital design agencies

- Worked on a wide range of projects for leading cultural institutions, including the British Museum, the National Gallery, and the Tate

- Regular speaker and commentator on design, empathy, and sustainability

- Fellow of the Royal Society of Arts

- Trustee of the kindness charity, People United

- Michael Smith is a respected figure in the design world. He is a creative thinker with a strong commitment to social impact. His work has helped to change the way we experience culture and heritage.

We can see that Bard, with its more recent data, is a little more accurate, but where things aren’t online both models made things up.

A biography of Michael Smith written by Chat GPT 3.5

A biography of Michael Smith written by Google's Bard

Fake content feeds more fake content

One of the major risks of more and more content being generated is that this content will go out into the internet with its potential hallucinations and then be fed into a newer model and taken as truth by that model – effectively poisoning the well of knowledge from which these models draw.

So not only is there a major risk of more misinformation but this misinformation becoming entrenched in knowledge according to machines.

Some small indicators of things like this so far are:

- AI making up or suggesting books that don’t exist (but may have been plausibly written by that author).

- Inserting characters into summaries of books.

And one closer to home…

- Making up facts that are picked up in students’ essays.

A friend of mine in higher education is seeing ‘facts’ that don’t appear anywhere in the literature but bear all the hallmarks of hallucinations.

Regulation isn’t there yet

Governments have historically been slow to react to new tech and regulate it, or promise impossible regulations like cryptographic backdoors.

This is further complicated by very different approaches to privacy and consumer rights on opposite sides of the Atlantic. And that is compounded when you consider different approaches to ownership and copyright around the world and the online world these models operate in.

There have recently been calls to slow down AI model releases (mostly a targeted attack on Open AI), but I don’t think anyone believes that, if releasing new models were banned, governments wouldn’t still be working on their own LLM tools to use as cyber weapons or similar.

And it could easily get messy. Based on previous legislation or attempts at legislation we can guess that the rules might be laughably slow to be implemented or overly heavy handed (and in the latter case probably to the disadvantage of already disadvantaged groups).

It’s worth noting that this isn’t a problem unique to AI technology but the internet in general when it comes to laws and regulation.

"1000 interns working on busy but unimportant work" as imagined by midjourney

When is it going to impact us?

If you look carefully at the images above you can see how Midjourney struggles with hands and faces sometimes. It feels like AI is still a way off replacing photography or illustration. But it probably will happen in time.

The impact in other areas is likely to be even swifter.

There’s no denying that this will have an impact on work and productivity. One of the most accurate descriptions I’ve heard of using Large Language Models, and one that rings true in my personal use of these tools, is that it’s like having 1000 interns.

It’s likely that the impact will very rarely be mind blowing or amazing, but they will take away a lot of the grunt work in things like summarisation, small research tasks and the like. And whilst the work needs to be checked, it’s more often than not right or going in the right direction.

The post-education learning gap

If the trend is that AI will replace roles currently filled by junior staff then we could be heading for a crisis. What happens to students fresh out of university? How do they learn the basics and understand the building blocks of work to then advance to more senior roles?

Moral and ethical lines

One really fascinating area I’ve found myself considering as I’ve explored these tools is what I’ve grandly referred to here as ‘moral and ethical lines’. But I think this could more simply be put as ‘the line of comfort’.

When I first used Chat GPT to help with some code at work, I felt uncomfortable – like I’d somehow cheated. But as I used it and discovered its flaws I came to see it more as a tool.

Perhaps this is how it felt when computers and word processors first became commonplace – was it cheating compared to doing it by hand?

And what about receiving AI generated content? How would I feel if a speech I listened to had been in part written by AI and would I expect the speaker to disclose this?

Some examples of this have already happened in the real world. A university in the United States sent out an email following a nearby school shooting to students and disclosed that it had been written by Chat-GPT which led to a furore. Would this have happened if they hadn’t disclosed it?

We all take for granted that email confirmations are generated but what if personalised looking donation ask emails were – would we care? Or do people want to feel like they’ve crafted them regardless of what the recipient thinks?

Will we see our often ethically-focused clients join some sort of anti-AI movement? Proudly declaring that all the content on their site is written by a human hand? And will they put such limitations on how we build their sites?

These questions are fast becoming real and something that I think we’ll face at Cog in the coming years if not months.

How might it work for us at Cog?

Now let’s talk about how this might work positively for us.

I previously tried to group this by area of work but I think it might be simpler to talk about the tools and then what we might be able to do with them.

All areas: image generation

One of the first things that comes to mind when using image generators like Stable Diffusion or Midjourney is: could we use these for test images on a site to save on gathering images? Would it avoid the copyright issues faced when pinching stuff off the internet?

The AI-generated images are currently quite low res, but there are AI upscalers that help us with that.

So I tried asking Midjourney for a few things, like an interior photograph of a theatre. If you look closely below, it struggled with where the stage was and put seats there in some cases. Maybe it would do better with a bar, which might be a more frequent image in its training data? This too had mixed results.

I also tried a queue outside – you can see here how Midjourney currently struggles with text. You can see my results below.

It seems like they might be better for more artistic things at the moment, like show art or posters…

Some of the prompts here include:

An illustration about a art exhibition called Horror show

A victorian steampunk skydiver in the style of Quentin Blake

A brutalist poster

A pink balloon floating – I was thinking of an opera poster here

An abstract photograph of dancers performing a modern dance piece

A selection of more artistic Midjourney images

All areas: Test Content

I’ve already used GPT for this exact task when I had to make 40 sample FAQs for a client whose site we’re working on. Something particularly good about this is that LLMs can adapt their output, so we could easily ask it to export the test content as JSON files that are easy to import into a site.

Although I’ve not yet worked out if we could give it a list of more complex fields and get it to make useful content to go in them.

I think the magic here would be if we could integrate it into WordPress as a plugin for our project managers to just prompt the machine for fields or even whole posts based on the fields available.
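As a rough sketch of the JSON idea above, the snippet below takes the text a model returns, parses it as JSON and keeps only the entries that have the fields we need before anything is imported into a site. The field names `question` and `answer` and the sample response are assumptions made for illustration. Since models can return malformed or incomplete output, checking it first seems a sensible precaution.

```python
import json

# Hypothetical fields we would expect each sample FAQ to have.
REQUIRED_FIELDS = {"question", "answer"}


def parse_faqs(raw: str) -> list[dict]:
    """Parse model output as a JSON list and keep only complete FAQ entries."""
    items = json.loads(raw)  # raises json.JSONDecodeError if the text is not valid JSON
    if not isinstance(items, list):
        raise ValueError("expected a JSON list of FAQ objects")
    faqs = []
    for item in items:
        # Skip anything that is not an object or is missing a required field.
        if isinstance(item, dict) and REQUIRED_FIELDS.issubset(item):
            faqs.append({field: str(item[field]).strip() for field in REQUIRED_FIELDS})
    return faqs


# Made-up example of what a model might return; the second entry is incomplete.
sample_response = """
[
  {"question": "Where can I park?", "answer": "There is a car park next door."},
  {"question": "Is there a cafe?"}
]
"""
print(parse_faqs(sample_response))
```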

Design and Project Management: Low and no code tools

Even as someone that enjoys programming, I think the bar to entry can really be too high. It’s tricky, confusing and locks a lot of what a computer can do away from people.

The thing that excites me about computers is giving people the power to improve their work, remove barriers and work with a machine to create something more than they could do alone.

One of the most exciting possibilities of Large Language Models is that if you can do the logical thinking you can create code from plain English that works.

I’ve used this in a light touch way already to generate complicated database queries. I also used Chat GPT to help me develop a Chrome extension to view Spektrix events on our clients’ sites. The idea had been in my head for a while, but Chat GPT turned it from a long learning journey into something achievable in an afternoon of hacking.

At the moment some domain specific knowledge to sense check the output is needed but as models improve I think this will be less and less needed.

The current cutting edge of Chat GPT which is currently behind a waitlist includes plugins that allow you to connect it to Zapier or even browse the web, creating the option of an autonomous machine tasked with going out to find information then using it with some particular code that it’s made – terrifying and exciting all at once!

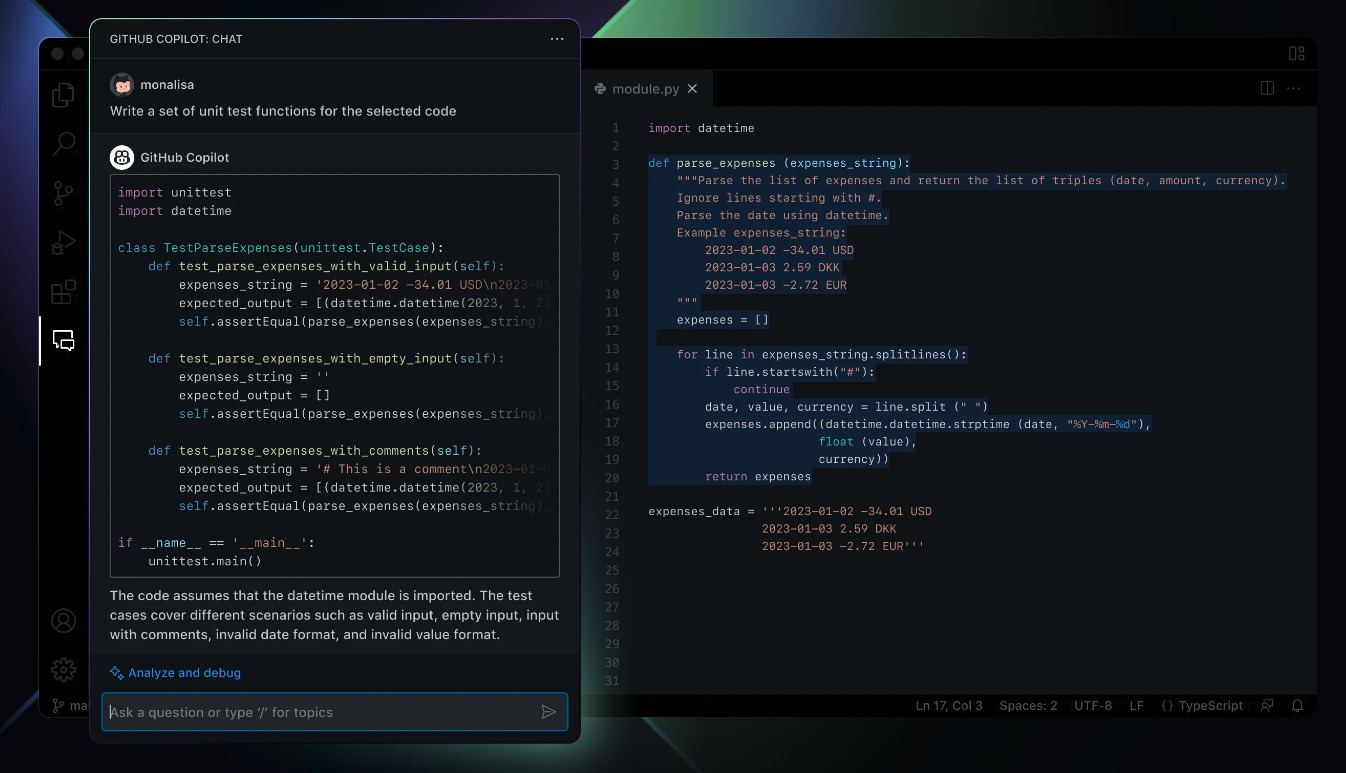

Development: Copilot and Copilot X

A demo image of GitHub Co-Pilot X

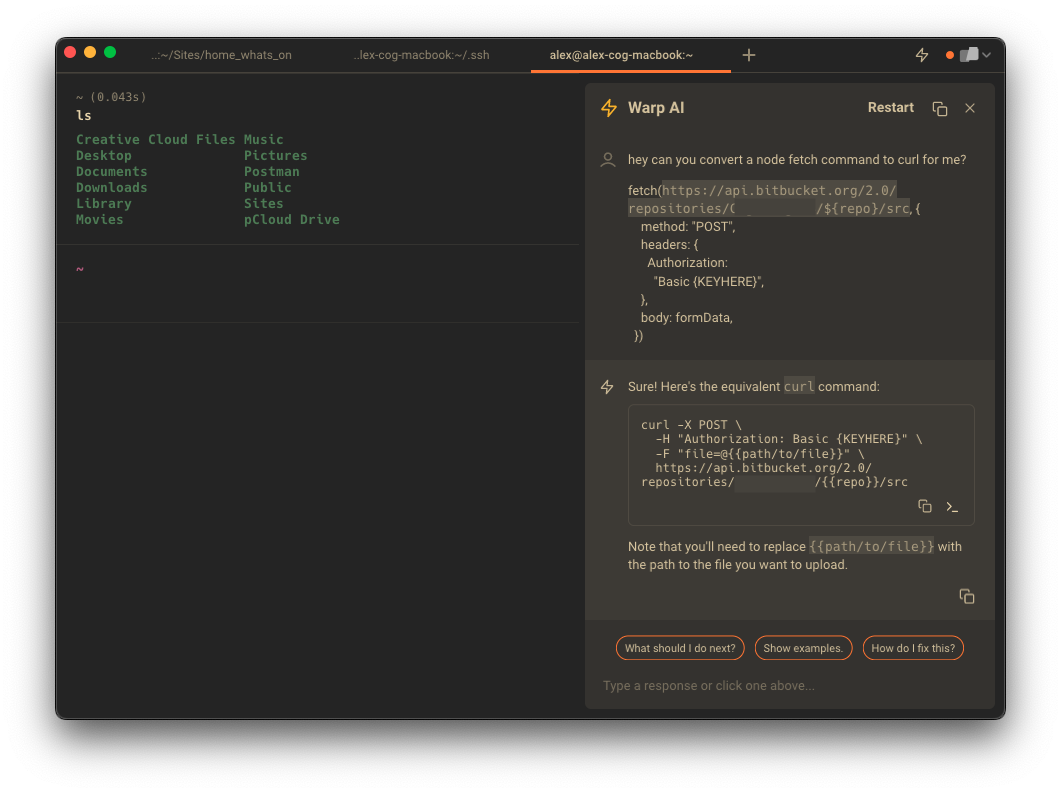

Warp Terminal's AI Assistant

I’ve already talked about how I’ve used Chat GPT to help me with code at work. Due to the sheer number of code examples on the internet, along with some more specialised training that Open AI have done, one area where LLMs work well is code.

Last year GitHub (owned by Microsoft, who are investing a lot in Open AI) released Copilot, an AI assistant trained on vast amounts of open source code. Copilot can autopredict frequent code patterns and reduce the time developers spend writing out repetitive boilerplate code (you’d be surprised how much of this there is).

Recently GitHub have announced Copilot X, which combines this with a chat-like interface that can allow you to seamlessly work alongside AI in your code environment.

I’ve already been testing a terminal tool that allows you to do just this. It can read what you’ve input and make suggestions, and it’s been really helpful so far.

For both learning and speeding up development, I think these tools have great potential when used carefully.

However I’m quite scared of the production code written and understood by potentially nobody that this inevitably will lead to.

All areas: Notes and Summarisation

One of the better ways that I’ve heard someone describe a mental model for how to approach using large language models is to think of them as a calculator for language. (hat tip to Simon Willison on the Changelog podcast)

They really do excel at things like summarisation. For example I gave Chat GPT my Cog Night Review for Daniel Kitson and asked it to write some tweets about the article:

“Daniel Kitson’s Outside: Work in Progress show was an unassuming yet engaging performance that blended comic relief with pandemic anxiety. Alex’s review perfectly captured the mixed feelings and reactions of the crowd, making for a unique and shared global experience.”

“Join Alex as he takes us on a journey to the rooftop of Peckham’s Bussey Building for Daniel Kitson’s Outside: Work in Progress show. With stunning views, engaging comedy, and a shared sense of pandemic anxiety, this was a night we won’t soon forget.”

“Alex’s review of Daniel Kitson’s Outside: Work in Progress show perfectly captured the essence of the night – a mix of comic relief and pandemic anxiety. Don’t miss out on his hilarious take on this unforgettable rooftop comedy experience!”

If you’ve read the article you’ll see that it does a very good job of summarising the key points into short tweets.

Another use case I could see would be if you recorded a meeting with a transcription tool like Otter AI and then asked Chat-GPT to summarise the meeting for you. With the prompt of a previous workshop report it might even get started on the report for us!

How it might work for our Clients

Of course many of the applications I’ve just talked about would also work for our often time-stretched and under-resourced clients. I also think there are a few other things that AI could do for them if they felt comfortable using it to do so.

Content and Text Generation

For instance I can see uses of summarisation for press teams e.g. “here’s a press release please write me 5 tweets and a facebook post”

With clients who are less involved in technology I think avoiding the marketing hype here and world of products that are really a thin layer over Open AI’s APIs will be tricky and in flux for a while yet. This is somewhere we can help I’m sure.

Our clients could also use LLMs for automated human-like communication through a chatbot or even emails. Personalisation would also work well for fundraising teams – if they felt comfortable doing so – “Write an email for James Smith to say thank you for attending these 5 performances this year … insert list of performances” could generate a personalised email with notes specifically about each show that customer had been to see.
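The thank-you email idea above could be sketched as a small template that fills in the customer’s name and their list of shows before the text is sent to a model. The function name and the performance titles below are invented for illustration, and in practice the list would come from the client’s ticketing system.

```python
def thank_you_prompt(name: str, performances: list[str]) -> str:
    """Build the prompt asking a model to write a personalised thank-you email."""
    show_list = "\n".join(f"- {title}" for title in performances)
    return (
        f"Write an email for {name} to say thank you for attending "
        f"these {len(performances)} performances this year:\n{show_list}"
    )


# Hypothetical attendance history for one customer.
print(thank_you_prompt("James Smith", ["A Winter Concert", "Spring Dance Showcase"]))
```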

Other potential uses of image generation or computer vision

For our museum clients, tools like Midjourney, as well as creating images, can also describe them in natural language – a different way to create captions and alt text for the 1000s of items in their collections. There have already been several fascinating threads about this and other purposes on the Museums’ Computer Group mailing list.

What’s Next?

As with lots of cutting edge technology, we’re currently very close to the code, and I think that the real magic will start when these models and tools are integrated into other tools or built upon.

Open AI’s early work on plugins that allow companies to connect to Chat GPT is an exciting start, and one we’re only really seeing the beginning of now.

Or even autonomous AI agents (small programs) that can go out and independently complete goals on the internet. This sounds like science fiction but people are already doing this.

Because I know our digital strategist Nazma will be thinking about this already – there’s the potential for a whole new world of SEO like optimisation designed to catch these models doing this kind of crawling of the internet. (Update – Google announced AI improved search at their 2023 Google IO Conference with AI informed Search Engine Result Pages – SERPs).

Or what about the potential of training personalised models on, say, all the recorded communication of a deceased historical figure to create a chatbot in a museum? Or, more scarily, a deceased relative?

Closing thoughts

I’ve been quite upbeat and bullish about the new tools I’ve been trying for most of this article. And I’d expect that, I’m excited by new technology and think computers really are magic sometimes. So of course I’m excited when I see something that seems to confirm this.

I think it’s worth taking a pause here, though hopefully not to end on a negative note.

Almost all the tools we’ve talked about today, whilst hugely exciting, are made by a small group of companies in Silicon Valley, whose ultimate aim is to create profit.

Having gathered, borrowed (or stolen?) 20+ years of our data, and in some cases our work, they’re now selling it back to us as a new tool. In the words of the author Ted Chiang, it’s a blurry JPEG of all knowledge online. He says:

“OpenAI’s chatbot offers paraphrases, whereas Google offers quotes. Which do we prefer?”

Or more radically put by artist James Bridle in the Guardian:

"The lesson of the current wave of “artificial” “intelligence”, I feel, is that intelligence is a poor thing when it is imagined by corporations. If your view of the world is one in which profit maximisation is the king of virtues, and all things shall be held to the standard of shareholder value, then of course your artistic, imaginative, aesthetic and emotional expressions will be woefully impoverished."

Whilst these tools offer huge opportunity for access, efficiency, learning and much more, they are currently no substitute for critical thought and human emotion, only an imitation of them, however useful that might be.

We’re really excited by the possibilities of the tools that will be coming soon to this space and if you want to work with us to use them for good we’ve got over 30 years of helping the arts, heritage and cultural sectors do just that.